Workflow of Data Engineering Project on AWS

27 Mar 2023 - Ravi Pandit

Figure 1: Architecture Diagram

- Amazon S3: Amazon S3 (Simple Storage Service) is a highly scalable and secure cloud storage service provided by Amazon Web Services (AWS). It allows users to store and retrieve any amount of data from anywhere on the web, with high durability, availability, and performance. S3 provides a simple web interface, as well as an API, to manage and access the data stored in it. Users can create buckets (i.e., containers for objects) in S3 and upload files (i.e., objects) to them.

- Crawler (to extract all the schema & information): AWS provides a managed service called AWS Glue Crawler, which is a fully-managed data crawler that automatically discovers, categorizes, and registers metadata about your data assets in AWS.

- Amazon Athena: Amazon Athena is an interactive query service provided by AWS that allows users to analyze data in Amazon S3 using standard SQL queries. It is a serverless service, which means that users do not need to manage any infrastructure or perform any database administration tasks. Athena automatically scales query processing based on the amount of data being queried, so users can run ad-hoc queries on large datasets with ease.

- AWS Glue: AWS Glue is a fully-managed extract, transform, and load (ETL) service provided by AWS that allows users to move data between different data stores and data lakes. It provides a serverless environment for running ETL jobs, which means that users do not need to manage any infrastructure or perform any database administration tasks.

- Amazon Redshift: Amazon Redshift is a fully-managed cloud data warehouse provided by AWS that allows users to store and analyze large amounts of structured and semi-structured data. It is designed to be fast, scalable, and cost-effective, making it a popular choice for big data analytics.

- VPC: VPCs provide a flexible and secure way for users to launch and run their AWS resources in a private and isolated network environment.

2. Dataset:

For this project, covid-19 dataset will be used, provided by the aws. Structure of dataset and information related to dataset can be studied through this blog:

Only selective datasets are downloaded as shown in the figure:

|

|

Figure 2: Dataset

3. Storing downloaded Dataset in Amazon S3:

Figure 3: Storing the downloaded Dataset in Amazon S3

4. Analyzing the data (Schema Crawling and Table Building):

Crawler is created for each and every csv data files where crawler crawls on all the dataset stored in S3 and extract all the schema and information.

Figure 4 : Crawler

After completion of crawler task, Amazon Athena is used to analyze each and every data (studying the datasets) extracted by crawler.

Figure 5: Amazon Athena

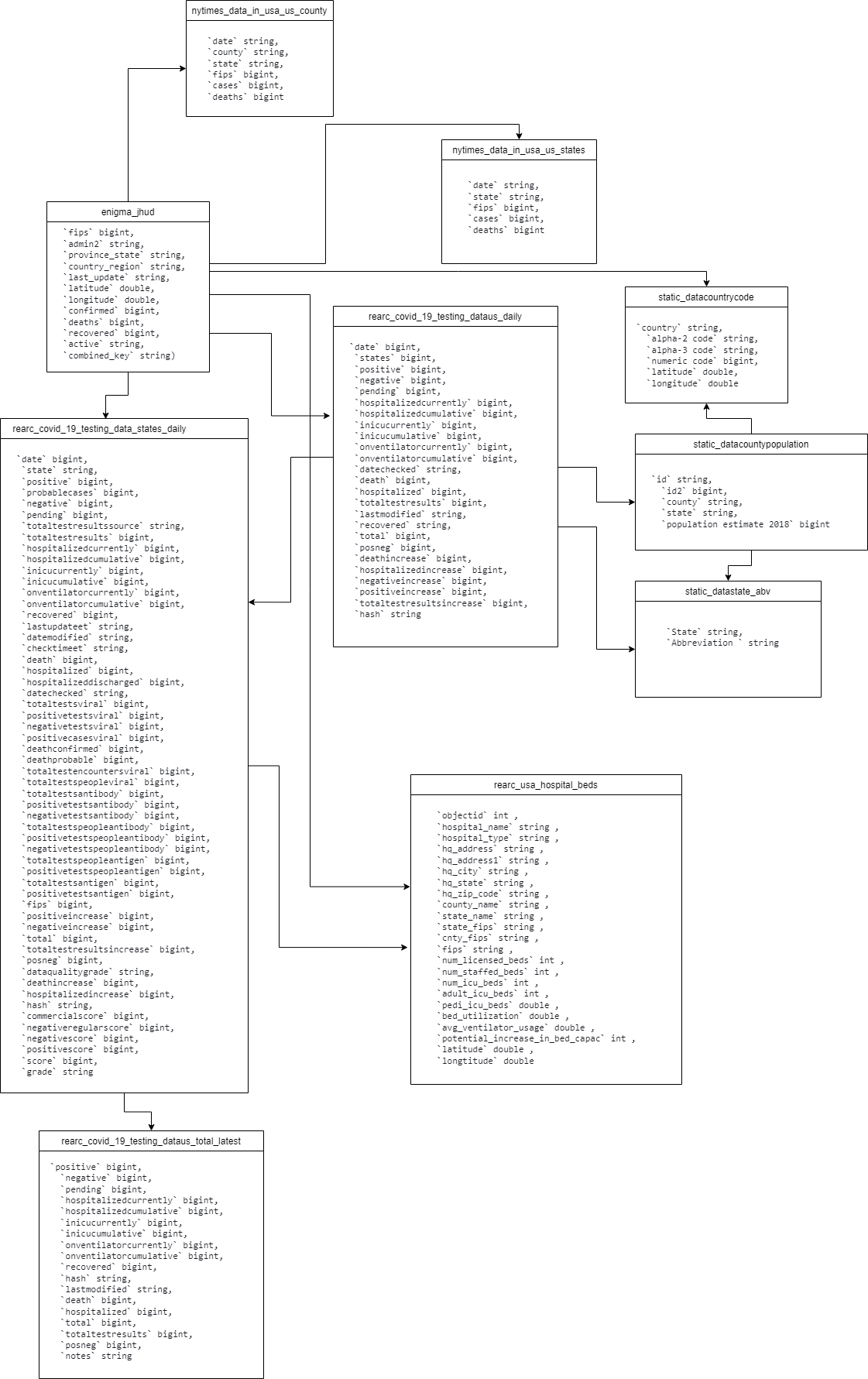

5. Building Data Models:

After analyzing the data, to understand the overall flow of the Data, Data Model is created.

Here, fips is primary key in most of the table which can also be studied from the data source of the dataset.

6. Schema:

Here, the fact table is factCovid and there are 3 different dimension table (i.e dimHospital, dimRegion, dimDate). Most of the information is redundant across most of the table in above Data model, thus schema is created because it is more simpler to analyze data in data warehouse when there is less table.

7. Use of Jupyter Notebook:

- Libraries:

import boto3

import pandas as pd

from io import StringIO

- Access Case:

- Connect to Athena and Query Data

- Function:

This function basically take boto3 object and dictionary and run query in Athena and store the output in S3 i.e in staging_dir

- Query Response:

Similarly, this process is repeated for all other 9 tables created using crawler shown in figure 4.

- Fixing errors’ found in table:

- Transformation (ETL job in python):

Changing Dimension Table(Date) property as explained in figure of schema:

- Save results to S3:

Here, specify the S3 bucket name in place of bucket.

Similarly, this process is repeated for all other 9 tables created using crawler shown in figure 4.

- Extracting Schema out of the DataFrame:

Note: These schema will be needed while creating table in Amazon Redshift.

8. Creating a Redshift Namespace (Cluster)

9. AWS Glue Job

In AWS, AWS Glue Jobs are used for the ETL (Extract, Transform, Load) process to move data from one location to another. AWS Glue is a fully managed ETL service that makes it easy to move data between different data stores and data lakes.

AWS Glue Jobs are used to run ETL scripts and perform data transformations on data stored in various data sources such as Amazon S3, Amazon RDS, Amazon DynamoDB, Amazon Redshift, and more. AWS Glue Jobs can be written in Python or Scala, and they can be run on a serverless infrastructure. This means that AWS Glue Jobs can scale automatically and can handle any amount of data processing.

Table is created in Amazon Redshift with the help create table command and copy command is used to copy the dimension table and fact table from S3 to Redshift. These all process is done by creating a job in AWS Glue Job.

Once the AWS Glue Jobs’ task is completed, data can be queried and viewed in Redshift query editor as shown in figure below:

Finally, this data can be used by Data Analyst and Data Scientist to derive insights and decision making.

Code for Nepal would like to thank DataCamp Donates for providing Ravi, and several other fellows access to DataCamp, to learn and grow.